More and more cars now embed a Head Up Display (HUD): a device integrated in the dashboard, behind the instrument cluster, that generates a virtual image superimposed on the road and displays relevant information to the driver, such as speed or GPS data. Within a few years, this equipment has proved to bring convenience to the driving experience. It also improves safety, because it increases the time the driver spends watching the road rather than looking for information in the cluster.

The next generation of HUDs, already on the rise, will integrate Augmented Reality (AR) concepts. In these concepts the virtual content is tightly linked to the real scene. Examples include highlighting elements on the road (pedestrians, traffic lights…) or interacting with the scene, for instance by coloring the road to be taken under GPS guidance. Although these differences look simple, they significantly increase the design complexity and the requirements placed on the HUD system.

On the verge of this new technology, the usefulness of such concepts for autonomous cars is often questioned. We will see that, contrary to the usual opinion, Augmented Reality increasingly appears to be a great asset for the future of autonomous driving.

HUD and AR HUD: how do they work and what are the main differences?

Current automotive HUDs display a virtual image on the road just above the hood of the car and provide simple but key information to the driver. A typical Human Machine Interface (HMI) shows the vehicle speed, the engaged gear, GPS information, the fuel gauge… The main goal is to keep the driver's attention on the road while giving him/her easier access to the status of the car. The driver no longer needs to look at the cluster or at any screen in the center console.

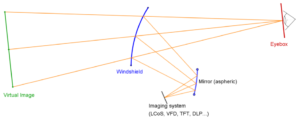

A HUD is composed of an imager that generates the image (e.g. VFD, TFT, DLP…) and an optical part (a mirror in the vast majority of systems) that conjugates and projects the image to a given distance. The projected image is said to be virtual, because the driver sees it “floating in the air”.
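The sketch below roughly illustrates how a mirror “conjugates” the imager to a distant virtual image. It models the optics as a single thin concave mirror in the paraxial approximation and ignores the windshield's own optical power, which is a strong simplification of a real freeform design. The 250 mm focal length and 220 mm imager distance are hypothetical values chosen only for illustration.

```python
"""Paraxial thin-mirror sketch of HUD image conjugation (illustrative only)."""


def virtual_image(object_dist_m: float, focal_m: float) -> tuple[float, float]:
    """Return (image distance, lateral magnification) for a thin concave mirror.

    Uses the mirror equation 1/s_o + 1/s_i = 1/f with real-is-positive signs.
    A negative image distance means the image is virtual (behind the mirror).
    """
    inv_image = 1.0 / focal_m - 1.0 / object_dist_m
    if inv_image == 0.0:
        # Imager exactly at the focal point: image sent to infinity.
        return float("inf"), float("inf")
    image_dist = 1.0 / inv_image
    magnification = -image_dist / object_dist_m
    return image_dist, magnification


if __name__ == "__main__":
    # Hypothetical values: 250 mm focal length, imager 220 mm from the mirror.
    s_i, m = virtual_image(object_dist_m=0.220, focal_m=0.250)
    print(f"image distance: {s_i:.3f} m (negative = virtual)")
    print(f"magnification: {m:.2f}x")
```

With these hypothetical numbers the image distance is negative, about -1.83 m, which means a virtual image behind the mirror. The magnification is about 8.3x. Placing the imager just inside the focal length is what yields a magnified, upright image that appears to float beyond the mirror.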

The following schematic shows the key parts of any windshield HUD: imager, freeform mirror and windshield:

The optical system is composed of complex-shaped surfaces. It is designed so that the orientation of the rays leaving the last optical surface (the windshield in most cases) forces the eyes to accommodate on an image placed at a long distance, hence the term virtual image. The virtual image can only be seen from an area called the eyebox. Its size is limited by various constraints (size of the mirrors, size of the system, brightness requirements…). A typical eyebox measures at least 130 mm x 60 mm, and it can be moved in height according to the driver's size (±60 mm of travel in most designs).

For now, however, this information relates to the status of the vehicle or its close vicinity (e.g. an object in the blind-spot area) and is not directly linked to the environment. A natural evolution of the concept is therefore to correlate the virtual display with the real scene, so that the fusion of the two gives direct and seamless information to the driver. Several examples come to mind:

- highlight the road to be taken, according to GPS guidance, with vibrant colors,

- frame in real time a pedestrian crossing the street,

- detect and display road signs or traffic lights,

- write content related to the environment: road names at a crossroads, available parking lots, restaurant reviews…

The figure below shows an artistic view of such concepts:

This correlation of a virtual image with the real scene is often referred to, in the press and in the literature, as Augmented Reality. The term can cover a wider span of applications depending on the field (see e.g. “Le traité de la réalité virtuelle”, volumes 1 to 5, Presses des Mines). In the automotive field, however, its definition is rather straightforward: displaying to the driver virtual content superimposed on the real scene, in real time.

However, moving from the everyday HUD, fitted to an increasing number of cars, to a true augmented reality experience is not just an upscaling of an existing technology. Some requirements are deeply redefined in the process. Four of the main ones are presented here:

- Projection distance,

- Field of View (FOV),

- Image position in space,

- Latency.

Projection distance

The projection distance is the distance between the driver's eyes and the position of the virtual image. In other words, it is the distance at which the driver perceives the image.

This distance is chosen and fixed for each design, because a dynamic projection distance is still difficult to achieve, both technically and financially, in a mass-production device.

In a classical HUD design, ergonomic studies and feedback have suggested that, for classical use, the virtual image should stay in the vicinity of the car. That is why it is often placed at the front end of the hood. In the vast majority of cases the projection distance is set to 2 m, but a range of 1.5 m to 2.5 m is also a safe assumption.
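One way to quantify why a 2 m image stays “in the vicinity of the car” rather than on the road is the focus (accommodation) mismatch between the virtual image and a real object. This mismatch is expressed in diopters (inverse meters). The sketch below is a simple illustration, and the 20 m and 50 m object distances are hypothetical examples.

```python
def focus_mismatch_diopters(image_dist_m: float, object_dist_m: float) -> float:
    """Accommodation difference (diopters, 1/m) between a virtual image and a real object."""
    return abs(1.0 / image_dist_m - 1.0 / object_dist_m)


if __name__ == "__main__":
    for obj in (20.0, 50.0):  # hypothetical distances of objects on the road
        d = focus_mismatch_diopters(2.0, obj)
        print(f"2 m image vs object at {obj:.0f} m: {d:.2f} D")
```

With a 2 m image, the mismatch is about 0.45 D for an object at 20 m and 0.48 D at 50 m. Because the mismatch depends on inverse distances, moving the image farther away shrinks it quickly.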

In an AR HUD design, the virtual image is placed at a long distance so that the virtual content is better superimposed on the actual objects, such as highlighted cars, pedestrians or road signs. The distance is then chosen as an average over the targeted use cases, or according to a specific one. Acceptable values range from 5 m to 15 m and more. This change greatly increases the complexity of the optics and mechanics (optomechanics): more powerful mirrors, higher sensitivity to assembly tolerances, increased volume and weight…
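One source of the higher assembly sensitivity can be illustrated with a simplified single thin-mirror model. The windshield power is ignored, the 250 mm focal length is a hypothetical value, and distances are measured from the mirror, not from the driver's eyes. The sketch first finds where the imager must sit to place the virtual image at a target distance. It then computes how far the image moves when the imager is displaced by a hypothetical 0.5 mm.

```python
"""How assembly tolerances scale with projection distance (thin-mirror sketch)."""

FOCAL_M = 0.250  # hypothetical mirror focal length


def imager_position(image_dist_m: float, focal_m: float = FOCAL_M) -> float:
    """Imager-to-mirror distance that places a virtual image image_dist_m behind the mirror.

    From 1/s_o + 1/s_i = 1/f with s_i = -image_dist_m (virtual image).
    """
    return 1.0 / (1.0 / focal_m + 1.0 / image_dist_m)


def image_distance(imager_dist_m: float, focal_m: float = FOCAL_M) -> float:
    """Virtual image distance behind the mirror for a given imager position.

    Only valid for imager_dist_m < focal_m (imager inside the focal length).
    """
    return 1.0 / (1.0 / imager_dist_m - 1.0 / focal_m)


def image_shift(target_m: float, imager_error_m: float) -> float:
    """Displacement of the virtual image caused by an imager positioning error."""
    nominal = imager_position(target_m)
    return image_distance(nominal + imager_error_m) - target_m


if __name__ == "__main__":
    for target in (2.0, 10.0):
        pos = imager_position(target)
        shift = image_shift(target, imager_error_m=0.0005)  # hypothetical 0.5 mm error
        print(f"target {target:4.1f} m: imager at {pos * 1000:.1f} mm, "
              f"0.5 mm error moves image by {shift * 1000:.0f} mm")
```

Under these assumptions, the same 0.5 mm error moves the image by roughly 4 cm at 2 m but by roughly 0.9 m at 10 m. The reason is that the imager must sit ever closer to the focal point as the target distance grows.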

Field of view

The size of the image is described in angular terms. The driver sees the image over two directions: the horizontal Field Of View (FOV) and the vertical FOV (see the previous figure).

In recent HUDs (end of 2017), typical values are around 6°x2° (horizontal x vertical). They are evolving toward 7°x2° or even more (the latest Lincoln Continental offers 10°x2.5° at a 2 m projection distance). The vertical dimension is currently restricted by the volume available under the dashboard and behind the cluster. To give an idea of how image sizes have evolved: in 2014, HUDs displayed a FOV of around 4.5°x2°. This corresponds to a virtual image of about 158 mm x 70 mm at a 2 m projection distance.
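Converting an angular FOV into a virtual image size is simple trigonometry. The sketch below assumes the FOV is centered on the line of sight and applies the formula to the two examples quoted above.

```python
import math


def virtual_image_size(fov_deg: float, distance_m: float) -> float:
    """Linear size (m) of a virtual image subtending fov_deg at distance_m.

    Assumes the FOV is centered on the line of sight, so each half subtends
    fov/2: size = 2 * distance * tan(fov / 2).
    """
    return 2.0 * distance_m * math.tan(math.radians(fov_deg) / 2.0)


if __name__ == "__main__":
    examples = {
        "2014 HUD, 4.5x2 deg @ 2 m": (4.5, 2.0, 2.0),
        "Lincoln Continental, 10x2.5 deg @ 2 m": (10.0, 2.5, 2.0),
    }
    for label, (h_fov, v_fov, dist) in examples.items():
        width = virtual_image_size(h_fov, dist)
        height = virtual_image_size(v_fov, dist)
        print(f"{label}: {width * 1000:.0f} mm x {height * 1000:.0f} mm")
```

The formula gives roughly 157 mm x 70 mm for the 2014 example, in line with the figure quoted above. For the 10°x2.5° case it gives roughly 350 mm x 87 mm.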

For AR, the real scene is enriched with virtual data, so the FOV should ideally match that of the human eye, or at least be 20°x10° (cf. K. Bark, C. Tran, K. Fujimura, V. Ng-Thow-Hing, “Personal Navi: benefits of an augmented reality navigational aid using a see-thru 3D volumetric HUD”, AUI conference, 2014).

At the moment, the vast majority of AR use cases require a FOV of 12°x4°. Horizontally, the larger the FOV, the wider the portion of the road that is covered. Vertically, the FOV directly determines the depth range of road covered by the virtual image: with 4°, the virtual image covers the road from about 15 m to 80 m. For an even richer experience, it could be interesting to highlight elements above the horizon line (e.g. road signs).
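The link between vertical FOV and road coverage follows from simple geometry. On a flat road, a point at ground distance d is seen at an angle atan(h / d) below the horizon, where h is the driver's eye height. The sketch below ignores vehicle pitch and road slope, and the 1.3 m eye height is a hypothetical value.

```python
import math


def look_down_deg(eye_height_m: float, ground_dist_m: float) -> float:
    """Angle below the horizon (deg) at which a point on a flat road is seen."""
    return math.degrees(math.atan(eye_height_m / ground_dist_m))


def vertical_fov_for_road(eye_height_m: float, near_m: float, far_m: float) -> float:
    """Vertical FOV (deg) needed to cover the road from near_m to far_m."""
    return look_down_deg(eye_height_m, near_m) - look_down_deg(eye_height_m, far_m)


if __name__ == "__main__":
    EYE_HEIGHT_M = 1.3  # hypothetical driver eye height above the road
    fov = vertical_fov_for_road(EYE_HEIGHT_M, near_m=15.0, far_m=80.0)
    top = look_down_deg(EYE_HEIGHT_M, 80.0)
    print(f"vertical FOV: {fov:.1f} deg, top edge {top:.1f} deg below horizon")
```

Under these assumptions, covering 15 m to 80 m requires about 4.0° of vertical FOV, with the top edge of the image roughly 0.9° below the horizon. Showing content above the horizon (e.g. road signs) would require the FOV to extend above that line.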

Image position

For any HUD, the driver needs to adjust the system to see a bright image. This adjustment is performed by a kinematic mechanism that tilts one part of the system, most of the time a mirror of the optical design. As a consequence, the virtual image moves with this adjustment: a short driver sees it higher on the road, and a tall driver sees it lower. A seesaw analogy helps here. Tall drivers need to receive the light from the system higher in the driver's seat than shorter drivers, which forces the virtual image to go down. In the simplified HUD figure at the beginning, the eyebox (on the right) must be moved upward for tall drivers, who sit “higher” in the seat. Consequently, the virtual image (on the left) moves downward. Drivers do not perceive this effect, because the adjustment is done once and the virtual image has no connection with the road.
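The seesaw effect can be approximated with a deliberately crude lever model. It assumes the line of sight pivots about a fixed point on the windshield; in a real system the mirror is what rotates, and the reflection point also shifts slightly. With the eye at distance a in front of that pivot and the virtual image at distance b beyond it, raising the eye by dh lowers the image by about dh * b / a. The 0.9 m eye-to-windshield distance is a hypothetical value.

```python
def image_drop(eye_raise_m: float, projection_dist_m: float, eye_to_pivot_m: float) -> float:
    """Vertical drop of the virtual image when the eyebox is raised (crude lever model).

    The line of sight is assumed to pivot about a fixed point on the windshield,
    eye_to_pivot_m in front of the eyes. The virtual image lies projection_dist_m
    from the eyes, i.e. (projection_dist_m - eye_to_pivot_m) beyond the pivot.
    """
    beyond_pivot = projection_dist_m - eye_to_pivot_m
    return eye_raise_m * beyond_pivot / eye_to_pivot_m


if __name__ == "__main__":
    EYE_TO_WINDSHIELD_M = 0.9  # hypothetical
    for dist in (2.0, 10.0):
        drop = image_drop(0.060, dist, EYE_TO_WINDSHIELD_M)
        print(f"projection {dist:4.1f} m: +60 mm eyebox -> image drops {drop * 1000:.0f} mm")
```

Under these assumptions, raising the eyebox by 60 mm lowers a 2 m image by about 73 mm but a 10 m image by about 0.6 m. This suggests why an effect that is harmless in a classical HUD becomes critical for AR.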

In an AR system, the image, or at least the virtual content, has to stay fixed in space. Any movement of the virtual image causes misregistration with the real scene, so a short driver and a tall driver would get a different experience.

In this regard, the image position in an AR HUD needs to be controlled, whereas it has never been an issue with traditional HUDs. This is a brand-new topic in HUD design. An eye-tracking system (or even head tracking, which could be sufficient) may become mandatory to offer the best match between virtual and real content. Such tracking minimizes the superposition error caused by their different positions in space (reminder: the projection distance is fixed, at least at first). This latter aspect is sometimes referred to as the parallax issue.
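The parallax issue can be quantified with a simple pinhole model. Suppose a marker is drawn on a virtual image plane at distance Dv to overlay an object at distance Do. It stays registered only when seen from the calibrated eye position. If the eye moves sideways by dx, the angular error is about dx * (1/Dv - 1/Do). A tracked eye position lets the renderer shift the marker on the image plane by dx * (1 - Dv/Do) to restore alignment. This is a minimal illustration; the 10 cm head offset and the distances are hypothetical.

```python
import math


def parallax_error_deg(eye_offset_m: float, virtual_dist_m: float, object_dist_m: float) -> float:
    """Angular misregistration after the eye moves eye_offset_m from its calibrated position.

    Difference of the direction tangents toward the marker and toward the object,
    converted to degrees with the small-angle approximation.
    """
    return math.degrees(eye_offset_m * (1.0 / virtual_dist_m - 1.0 / object_dist_m))


def marker_correction_m(eye_offset_m: float, virtual_dist_m: float, object_dist_m: float) -> float:
    """Lateral shift of the marker on the virtual image plane that restores alignment.

    Requires the eye offset to be known, e.g. from an eye or head tracker.
    """
    return eye_offset_m * (1.0 - virtual_dist_m / object_dist_m)


if __name__ == "__main__":
    dx = 0.10  # hypothetical lateral head movement from the calibrated position
    for obj in (10.0, 50.0):  # object at, then beyond, the 10 m virtual image
        err = parallax_error_deg(dx, 10.0, obj)
        fix = marker_correction_m(dx, 10.0, obj)
        print(f"object at {obj:.0f} m: error {err:.2f} deg, correction {fix * 100:.0f} cm")
```

An object lying exactly at the projection distance shows no parallax. For an object at 50 m, the same 10 cm head movement produces about 0.46° of error. A tracker-driven 8 cm shift of the marker on the image plane would cancel it.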

Latency

In virtual reality, augmented reality, or mixed reality with see-through glasses, the virtual content must be updated extremely quickly after a movement of the observer (head rotation, eye movement…) or a change in the scene. The delay between that movement or change and the corresponding update is called latency.

Studies suggest a maximum threshold of 20 ms for augmented reality and advise not to exceed 7 ms in mixed reality. Beyond these values, users risk discomfort, intermittent stress and headaches, and ultimately rejection of the system.
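A simple estimate shows why these thresholds matter for registration. Without prediction, the overlay lags behind by the relative angular rate multiplied by the latency. The sketch below assumes a constant yaw rate. The 20°/s rate is a hypothetical value for a turning vehicle or a rotating head, and the 30 m target distance is also illustrative.

```python
import math


def latency_error(angular_rate_deg_s: float, latency_s: float, target_dist_m: float) -> tuple[float, float]:
    """Registration error built up during latency_s at a constant angular rate.

    Returns (angular error in deg, lateral offset in m at target_dist_m).
    """
    angle_deg = angular_rate_deg_s * latency_s
    offset_m = target_dist_m * math.tan(math.radians(angle_deg))
    return angle_deg, offset_m


if __name__ == "__main__":
    RATE_DEG_S = 20.0  # hypothetical relative yaw rate
    TARGET_M = 30.0    # hypothetical distance of the highlighted object
    for latency_ms in (7, 20, 50):
        angle, offset = latency_error(RATE_DEG_S, latency_ms / 1000.0, TARGET_M)
        print(f"{latency_ms:2d} ms -> {angle:.2f} deg, {offset * 100:.0f} cm at {TARGET_M:.0f} m")
```

Under these assumptions, at 20 ms the overlay is already about 0.4°, or roughly 21 cm, away from an object 30 m ahead.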

Solutions to reduce latency depend on the technology used, and we do not find it relevant to draw up an exhaustive list. The main idea is always to find optimizations in the design and the implementation, in particular in the rendering engine, the drivers or the hardware.
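One widely used family of tricks renders the virtual content for the pose expected when the frame reaches the display, instead of the pose measured when the sensors sampled it. Below is a minimal sketch of constant-rate yaw prediction. It illustrates a generic technique and does not describe the systems studied in this article. The `YawSample` type and the sample values are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class YawSample:
    t_s: float      # sample timestamp (s)
    yaw_deg: float  # measured yaw of the vehicle or head (deg)


def predict_yaw(prev: YawSample, curr: YawSample, latency_s: float) -> float:
    """Extrapolate yaw to display time, assuming the yaw rate stays constant."""
    dt = curr.t_s - prev.t_s
    if dt <= 0.0:
        raise ValueError("samples must be strictly increasing in time")
    rate_deg_s = (curr.yaw_deg - prev.yaw_deg) / dt
    return curr.yaw_deg + rate_deg_s * latency_s


if __name__ == "__main__":
    a = YawSample(t_s=0.000, yaw_deg=10.0)
    b = YawSample(t_s=0.010, yaw_deg=10.2)
    # Render for where the scene will be when the frame reaches the display.
    print(f"render at yaw {predict_yaw(a, b, latency_s=0.020):.2f} deg")
```

With samples at 10.0° and 10.2° taken 10 ms apart (a 20°/s rate) and 20 ms of latency, the predicted yaw is 10.6°. Constant-rate extrapolation is only one option. Its residual error grows with angular acceleration and with the amount of latency being compensated.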

Cues to the advantages of AR in Autonomous Conditions

The autonomous car is clearly one of the biggest trends in the automotive world of the past couple of years, as seen at motor and consumer shows. The potential of such a system is already tangible: increased safety and fewer accidents, traffic optimization with increased traffic flow, freeing up the driver, and the list goes on.

Before the dream stage of a fully autonomous car is reached, autonomous driving is categorized into levels with different capabilities. These go from level 0, where the car warns the driver but the driver stays in control, through level 3, where most actions are taken by the car, up to level 5, where human assistance is optional. For now, the car will obviously still require some assistance from the driver to handle unknown situations (around levels 2 to 4). This is sometimes referred to as cooperative driving mode. The car executes some defined actions (accelerating or braking, turning, etc.) but requires the driver's assistance for some decisions (choosing the best lane, handling some key maneuvers).

This is where Augmented Reality comes into play, as it will offer a set of tools for the driver to interact with the car, and for the car to communicate intelligible information to the driver.

During the Localisation and Augmented Reality (LRA) project at IRT SystemX, use cases were studied in a simulator and on a car equipped with an AR HUD prototype. The goal was to define the appropriate visual grammar for the relevant objects of the environment. The following pictures show the tools and renderings used to study the link between autonomous driving and AR systems, as well as AR use cases.

These studies showed Augmented Reality to be a great asset for annotating the road. It can highlight nearby cars or obstacles together with their relative speed and distance, the direction to be taken, and key elements of the scene (pedestrians, traffic lights…).

On another level, concerns about autonomous cars are commonly heard, in particular regarding trust: lack of consumer confidence, and fear of inefficiency or of accidents.

Here again, a virtual image collocated with the environment provides a rich and powerful communication channel between the car and the driver. The AR system will highlight the elements on the road mentioned above. At any moment, the driver will know what the car understands of its environment and vicinity, and which decisions it is taking (merging into traffic, stopping because a danger has been identified…). The goal is to give the driver confidence that the car can handle the complexity of driving.

For all these reasons, the assumption that autonomous driving and augmented reality are antagonistic appears to us to be false. In the first stages, augmented reality could be a great asset for autonomous cars, both for their early adoption and for their integration into cooperative driving.

Conclusion

After many years of attempts in different fields (avionics, military, consumer market, automotive), many converging factors now make it possible to integrate an Augmented Reality system in a car and deliver a convincing experience. On the hardware side, the emerging in-car HUD market makes bright imagers available at reasonable prices, even if some technical challenges remain to be overcome.

On the software side, smart detection devices, driven by the autonomous car market, can recognize more and more objects in the driving scene.

User tests of Augmented Reality use cases were carried out at IRT SystemX through different approaches:

- with a simulation cave (mockup cockpit with hemispheric projection screen),

- with real devices, such as an AR head-up display prototype in a car (BMW X5).

Simulation is a powerful tool to establish the minimum and desired requirements of the applications. It also allows HMI ideas and car and driver behaviors to be explored and screened quickly. Experiments stay under control in safe conditions, with precise data to use and compute (positions of simulated vehicles, low-latency operation, etc.). The studies conclude that the AR HUD is a powerful system to pair with the autonomous car. It helps the driver better understand the environment and take over driving more easily when switching from autonomous to cooperative or non-autonomous mode.

Pierre Mermillod

Pierre Mermillod is a design engineer in charge of an optical innovation team at Valeo (Interior Control division), working on head-up displays and cockpit imaging. He graduated from the Institut d'Optique Graduate School (2005) and has held positions ranging from design studies to technical expertise, notably in the defense and automotive fields. For two years, he was associated part-time with the LRA project at IRT SystemX, providing technical expertise on optomechanical systems and augmented reality.